A lot has already been said about Assassin’s Creed: Unity, and to some degree gamers are right: Assassin’s Creed: Unity is a mess, full of bugs and glitches. However, we should all admit how gorgeous this game actually looks. Assassin’s Creed: Unity is by far one of the most beautiful games out there, with an amazing lighting system and some glorious Global Illumination effects.

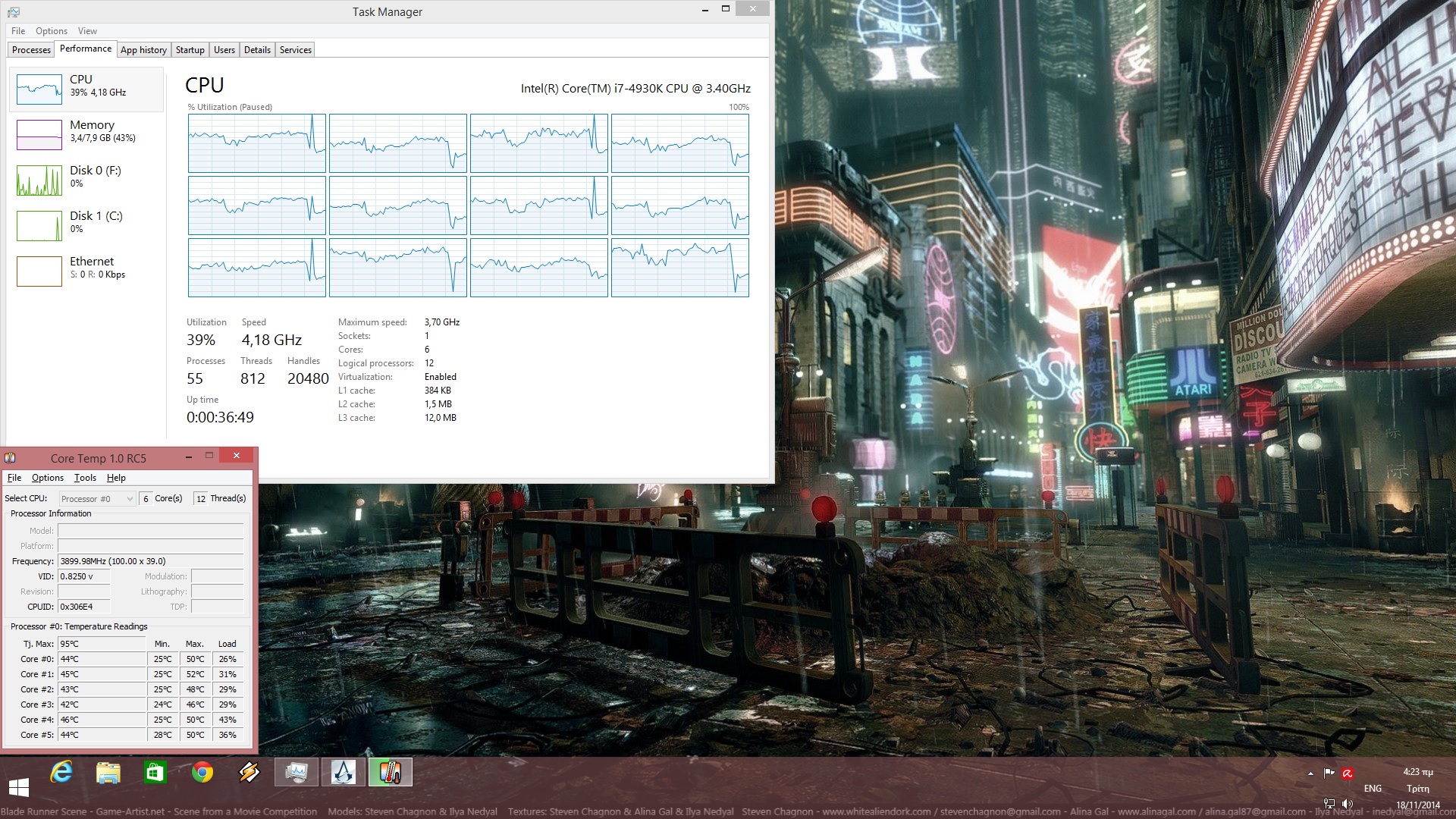

Assassin’s Creed: Unity is powered by the latest iteration of the AnvilNext engine. As always, we used an Intel i7 4930K with 8GB of RAM, NVIDIA’s GTX690, Windows 8.1 64-bit and the latest version of the GeForce drivers. NVIDIA has already included an SLI profile in its drivers that offers amazing SLI scaling; however, we witnessed some really frustrating stutters that occurred randomly.

These stutters appeared in both SLI and Single-GPU modes, suggesting that there is something really ‘strange’ going on in the background. Most of the time, these stutters appear right after a cut-scene, making the game almost unplayable. During those stutters, all physics go crazy (most noticeably the hair physics, resulting in THAT hairstyling AC joke that has been circulating the web over the last few days). Moreover, performance takes a big hit and mouse movement is as unresponsive as it can get. A simple workaround (which does not always work) is to restart either the game or your machine whenever those stutters appear; however, Ubisoft needs to address this issue as soon as possible.

To find out whether the game scales well across a variety of CPUs, we simulated a dual-core, a tri-core and a quad-core system. Assassin’s Creed: Unity scaled incredibly well across twelve threads, something that definitely impressed us. Still, we did not notice any performance difference between our hexa-core and our simulated quad-core systems. Moreover, enabling Hyper Threading did not bring any additional performance gains on our systems, despite the incredible scaling across those additional threads.

For what it’s worth, with SLI enabled, our simulated dual-core CPU (with Hyper Threading enabled) was able to push a constant 40fps, even when there was a huge crowd. In Single-GPU mode, our simulated dual-core was limited by the GPU, as can be seen in the following screenshot. Still, the overall experience was better than the one witnessed on consoles (in terms of both framerate and visuals).

In short, Assassin’s Creed: Unity is, surprisingly enough, a mostly GPU-bound title. While a current-gen Intel dual-core (with Hyper Threading) will be enough for a constant 40fps, a single GTX680 will only offer a constant 35fps (no matter what CPU you’re using). A current-gen quad-core is capable of pushing a constant 60fps, and there is no performance difference between a current-gen quad-core and a current-gen hexa-core.

With SLI enabled, we were able to enjoy an almost constant 60fps experience. Our framerate dropped to the 50s when there was a huge crowd, but most of the time we were above 60fps. That was at 1080p with Very Ultra Environment Quality, High Textures, High Shadows, HBAO+, FXAA and Bloom enabled. Anything higher than that and we were limited by our VRAM. There was slight stuttering when we enabled PCSS shadows (though our GTX690 was able to handle them most of the time), and there were major stutters with Very High textures.

Scalability is another big issue for Assassin’s Creed: Unity, as we were not able to achieve a constant 60fps on a single GTX680 even at the game’s lowest settings. As a result, we are almost certain that a number of PC gamers will face major performance issues, especially those who own weaker graphics cards. Ironically, Ubisoft was spot on with the game’s GPU requirements. But do its visuals justify them?

Not at its lower settings, in our opinion, but most definitely at its higher ones. Assassin’s Creed: Unity sports a crowd similar to the one found in the benchmark stress test of Hitman: Absolution, and that stress test ran at a minimum of 60fps on a single GTX680 at max settings. Obviously, Assassin’s Creed: Unity packs a better lighting system (as well as additional Global Illumination effects and Physically Based Rendering) than Hitman: Absolution. On the other hand, Hitman: Absolution features a better and more demanding LOD system. Moreover, we are basically comparing a linear stage (Hitman: Absolution) with an open-world game that packs ‘enterable’ buildings (Assassin’s Creed: Unity). Still, it’s quite obvious that a single GTX680 should be able to handle this title better than it currently does.

But then again, at its highest settings Assassin’s Creed: Unity is gorgeous. As we’ve already said, it is by far one of the best-looking games out there. Thanks to the newly added Physically Based Rendering, the indoor environments look incredible, and with high amounts of AA this title can come close to CG-quality visuals. The fact that you can also enter most buildings without any annoying loading screens is a huge improvement over other open-world games. Ubisoft also did a great job with the animations for almost all main characters, and Arno moves far better and more smoothly than all previous assassins. Given its open-world nature, you will be able to spot some low-res textures here and there (even with Very High textures enabled), but that’s understandable. Most main characters are highly detailed and made of a high number of polygons. Of course, that cannot be said about the game’s NPCs, who look outdated and sometimes really ‘blocky’, but then again that was also to be expected.

Still, there is one graphical feature that Ubisoft left unpolished, and that’s none other than the ridiculously aggressive LOD system. In Assassin’s Creed: Unity, characters switch between their low- and high-detail models right in front of the player, and buildings appear out of thin air on the horizon. Hell, Ubisoft did not even implement a fade-in/fade-out effect to smooth out the pop-in of objects and characters. Couple this awful LOD system with all sorts of bugs and glitches that plague Ubisoft’s title, and you get yourself a game that would have benefited from a couple of months of additional development.

All in all, Assassin’s Creed: Unity is a mixed bag. At Very Ultra, the game looks phenomenal, especially if you enable the higher amounts of AA (but to do that you will need some really, really, REALLY powerful GPUs). At Low, the game feels like something that should be running with ease on GPUs like the GTX680. And while there are occasions when the game achieves CG-quality visuals, its awful LOD system makes it look ‘weird’ and ‘fuzzy’ in motion. And don’t get us started on all its graphical bugs and glitches.

Assassin’s Creed: Unity is one of the most beautiful games out there, but it is also one of the most demanding ones. PC gamers have been asking for titles that push their hardware to its limits, and at its Very Ultra settings, Assassin’s Creed: Unity does exactly that. Moreover, Assassin’s Creed: Unity looks visually better than pretty much all current-gen games (surpassing even Ryse or Crysis 3, especially if you take into account Unity’s open-world nature). If Ubisoft manages to resolve all the bugs and glitches and further optimize the game, we can safely start talking about a masterpiece. In its current state, however, Assassin’s Creed: Unity is the epitome of an unfinished, bug-ridden game.

Enjoy!

Nothing is true, everything is permitted.

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved (and still loves) the 16-bit consoles, and considers the SNES to be one of the best consoles. Still, the PC platform won him over, mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, the Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
