Last week, Daedalic Entertainment released The Lord of the Rings: Gollum on PC. The game is powered by Unreal Engine 4, so it’s time to benchmark it and see how it performs on the PC platform.

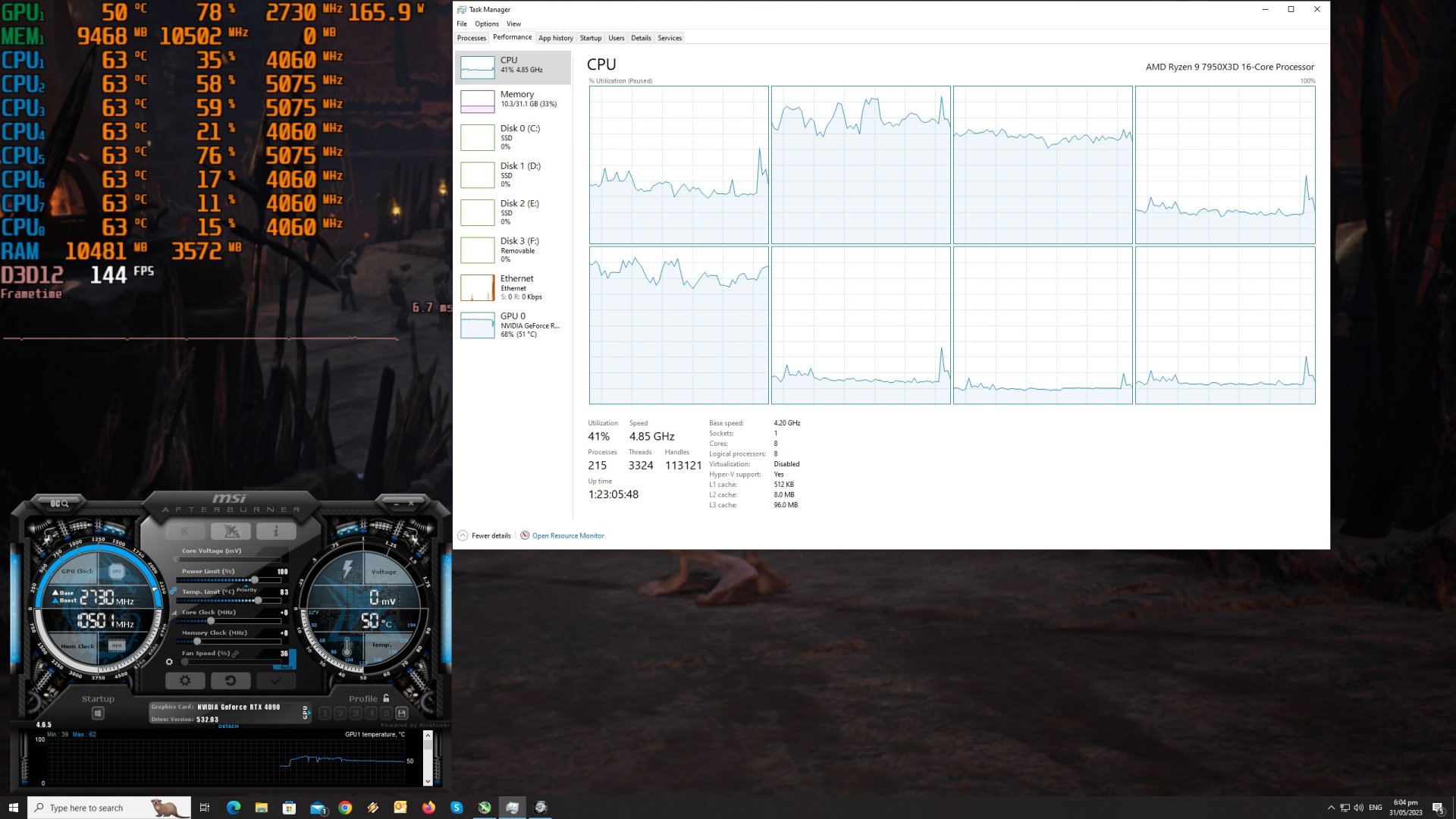

For this PC Performance Analysis, we used an AMD Ryzen 9 7950X3D, 32GB of DDR5 at 6000MHz, AMD’s Radeon RX580, RX Vega 64, RX 6900XT, RX 7900XTX, NVIDIA’s GTX980Ti, RTX 2080Ti, RTX 3080 and RTX 4090. We also used Windows 10 64-bit, the GeForce 532.03 and the Radeon Software Adrenalin 2020 Edition 23.5.1 drivers. Moreover, we’ve disabled SMT and the second CCD on our 7950X3D.

Daedalic has added a few graphics settings to tweak. PC gamers can adjust the quality of Anti-Aliasing, Shadows, Textures, View Distance and Visual Effects. There are also settings for Gollum’s Hair, Texture Streaming, Ray Tracing, and DLSS 2/3. Unfortunately for AMD owners, the game does not support FSR 2.0 at launch.

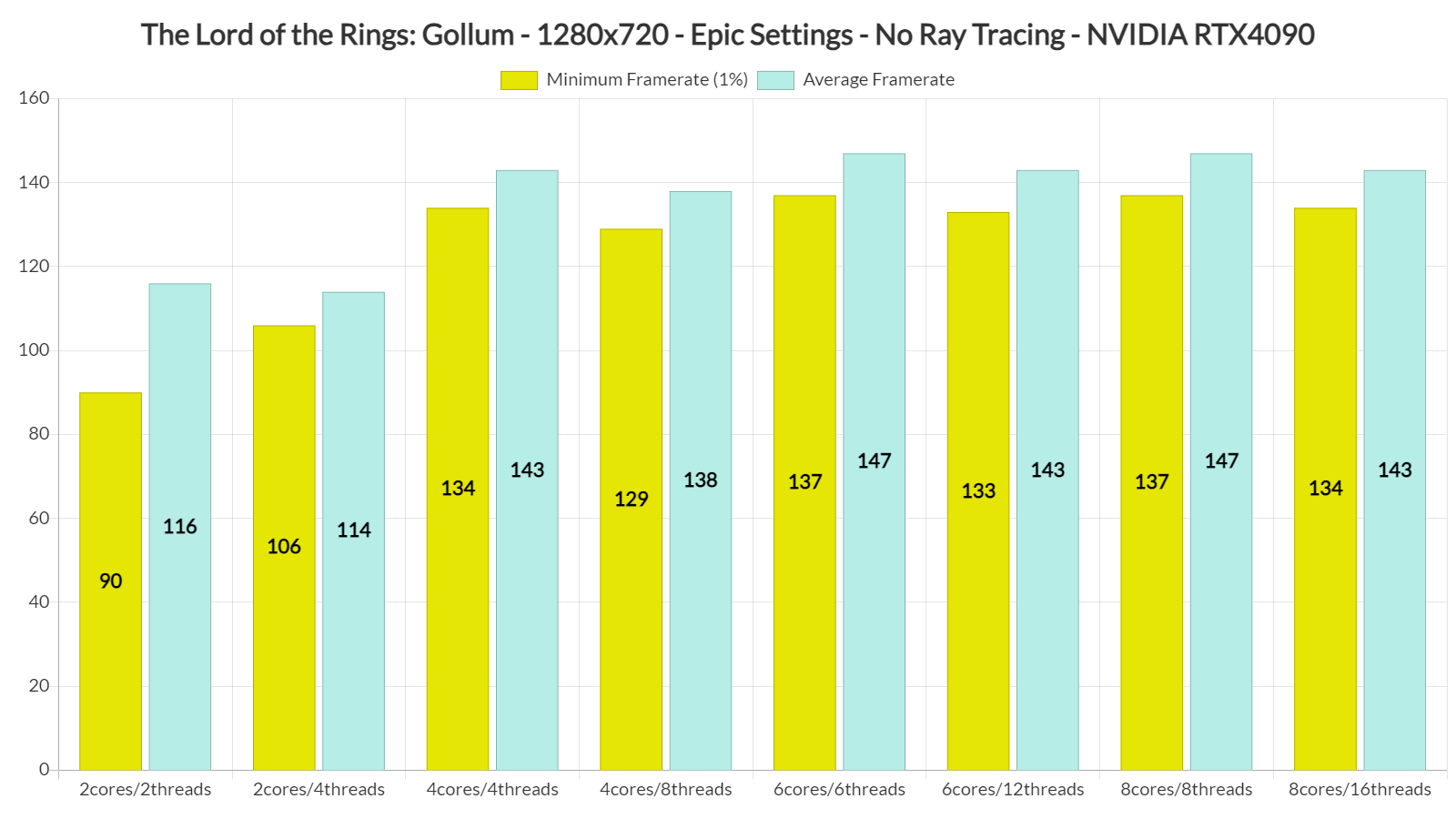

In order to find out how the game scales on multiple CPU threads, we simulated a dual-core, a quad-core and a hexa-core CPU. Contrary to most of the latest releases, The Lord of the Rings: Gollum does not require a high-end CPU. With SMT enabled, we were able to get framerates over 100fps at all times at 720p/Epic Settings/No RT. Without SMT, our minimum framerates were always above 90fps; however, we had severe stuttering issues.

Speaking of stutters, The Lord of the Rings: Gollum suffers from both traversal and shader compilation stutters. And although we don’t mind small spikes/stutters in most PC games, the stutters in Gollum are awful. Daedalic will have to address them as soon as possible because it isn’t currently possible to enjoy the game, even when gaming at really high framerates.
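To illustrate why a game can stutter badly even at really high framerates, here is a minimal Python sketch that summarizes a list of frame times. It is not the tooling used for this analysis; the function name `summarize_frametimes`, the `spike_factor` threshold and the sample numbers are all hypothetical, and the "1% low" definition used here (average over the slowest 1% of frames) is only one of several in common use.

```python
from statistics import median


def summarize_frametimes(frame_times_ms, spike_factor=2.5):
    """Return (average fps, 1% low fps, indices of stutter frames).

    frame_times_ms: per-frame render times in milliseconds.
    spike_factor: hypothetical threshold; a frame counts as a stutter
    when it takes longer than spike_factor times the median frame time.
    """
    if not frame_times_ms:
        raise ValueError("need at least one frame time")

    # Average fps over the whole capture.
    total_ms = sum(frame_times_ms)
    avg_fps = 1000.0 * len(frame_times_ms) / total_ms

    # 1% low: average fps over the slowest 1% of frames (at least one frame).
    slowest = sorted(frame_times_ms, reverse=True)
    n_low = max(1, len(slowest) // 100)
    low_fps = 1000.0 * n_low / sum(slowest[:n_low])

    # Flag frames that are far slower than a typical frame.
    typical = median(frame_times_ms)
    spikes = [i for i, t in enumerate(frame_times_ms) if t > spike_factor * typical]

    return avg_fps, low_fps, spikes


# Made-up capture: 99 smooth frames at 10 ms plus one 110 ms hitch.
avg, low, spikes = summarize_frametimes([10.0] * 99 + [110.0])
print(f"average: {avg:.1f} fps, 1% low: {low:.1f} fps, stutter frames: {spikes}")
```

In this made-up capture the average still works out to roughly 91fps, yet the single 110ms hitch drags the 1% low down to about 9fps. That is the kind of gap between the average framerate and how the game actually feels that stutters produce.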

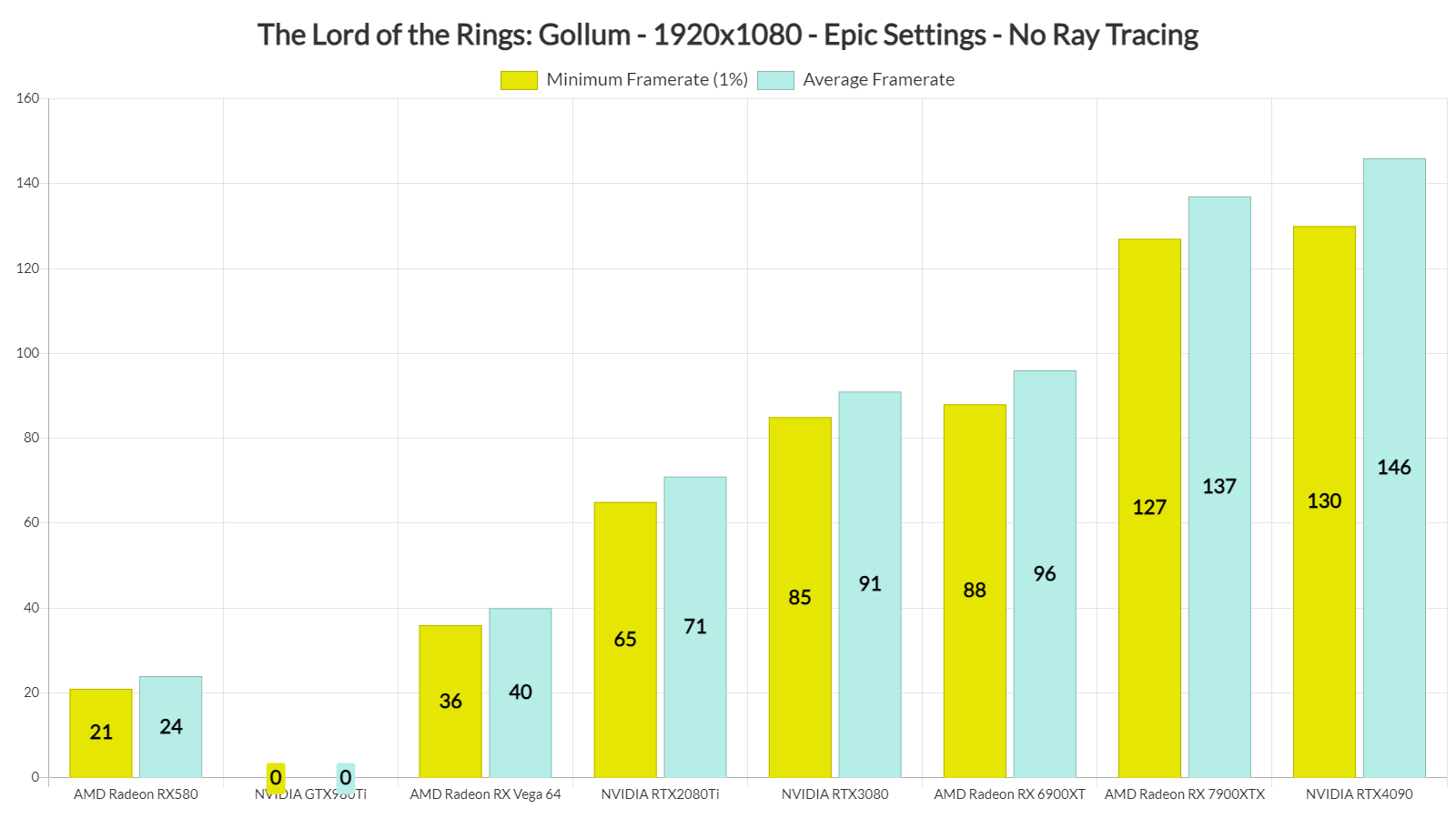

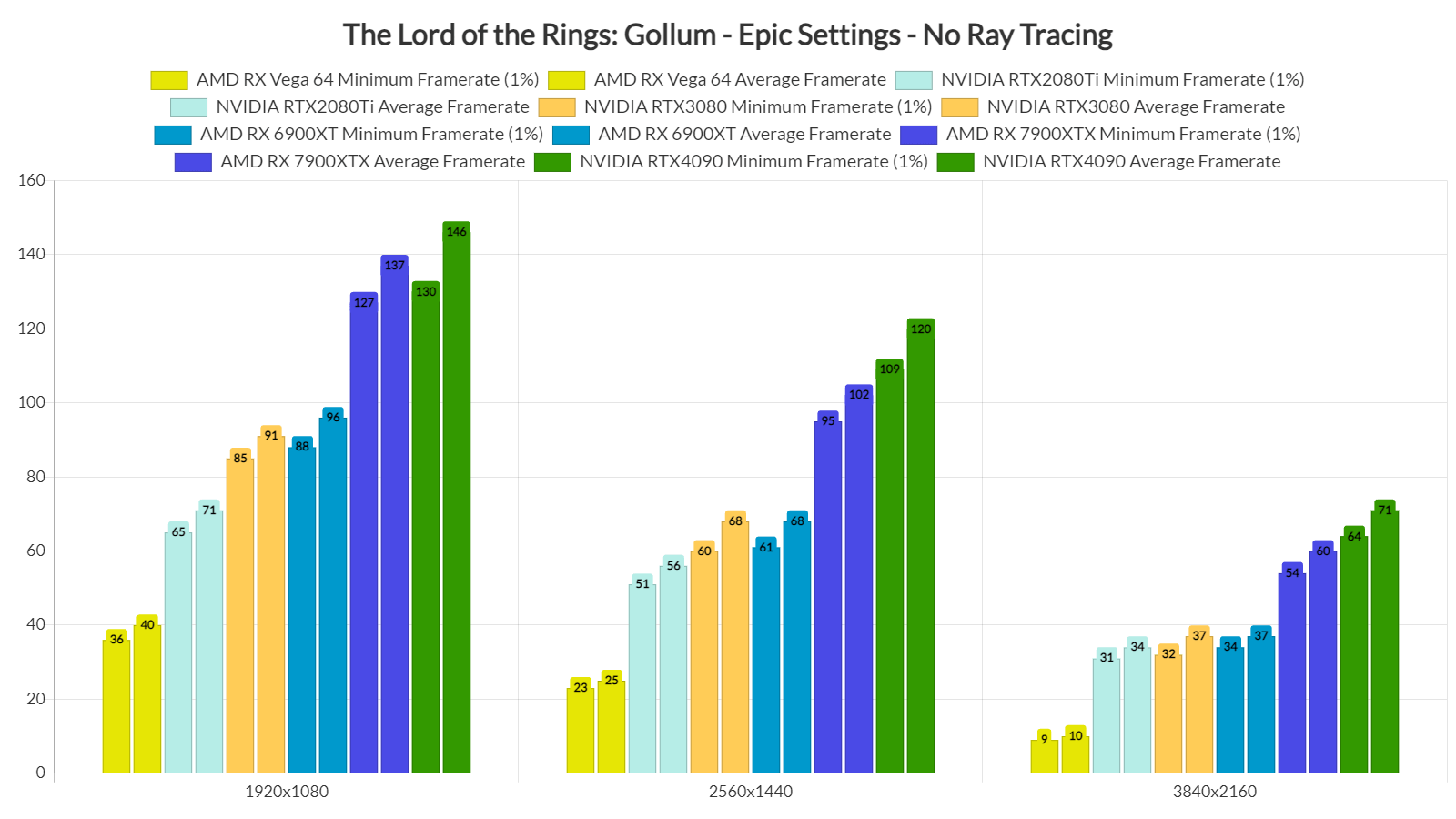

At Native 1080p/Epic Settings, you’ll need a GPU equivalent to the NVIDIA GeForce RTX 2080Ti. Interestingly enough, the game always crashed on our NVIDIA GTX980Ti. Our guess is that the GTX980Ti’s 6GB of VRAM isn’t enough to even launch the game.

At Native 1440p/Epic Settings, the RTX 3080 and RX 6900XT struggled to run the game at over 60fps. As for Native 4K/Epic Settings, the only GPU that was able to run the game smoothly was the NVIDIA RTX 4090.

Those interested in some DLSS 2/3 and Ray Tracing benchmarks can read our previous article.

Graphics-wise, The Lord of the Rings: Gollum looks dated. There is literally nothing spectacular here, and the game does not justify its high GPU and VRAM requirements. The game would benefit from RTGI and RTAO. Instead of using these Ray Tracing effects, however, Daedalic has decided to use RT in order to enhance the game’s reflections and shadows.

All in all, The Lord of the Rings: Gollum is a big disappointment, and is the exact opposite of Dead Island 2. Dead Island 2 uses UE4 and shocked us with its graphics and overall performance. Gollum, on the other hand, looks like an old-gen game and performs way worse than DI2. Add to this the awful stuttering issues, and you’ve got yourselves an awful PC game!

John is the founder and Editor in Chief at DSOGaming. He is a PC gaming fan and highly supports the modding and indie communities. Before creating DSOGaming, John worked on numerous gaming websites. While he is a die-hard PC gamer, his gaming roots can be found on consoles. John loved – and still does – the 16-bit consoles, and considers SNES to be one of the best consoles. Still, the PC platform won him over consoles. That was mainly due to 3DFX and its iconic dedicated 3D accelerator graphics card, Voodoo 2. John has also written a higher degree thesis on “The Evolution of PC graphics cards.”
